The news of the Bonn and Bologna openings was met with great enthusiasm by the EuroHPC ACROSS project partners, who look forward to cooperating more closely with ECMWF.

ECMWF, in cooperation with domain experts including the Max-Planck Institute (Hamburg, DE), Deltares (NL) and Neuropublic (GR), is leading the ACROSS partners in developing the hydro-meteorological and climatological use case. This use case will run on the ACROSS platform, co-designed by Links foundation (IT), Atos (FR), IT4I (CZ), Inria (FR) and Cini (IT), and will exploit EuroHPC computing resources, namely the CINECA Leonardo and Lumi pre-exascale supercomputers and the IT4I Karolina petascale machine.

Our goal is to demonstrate global simulations at 5 km resolution or better, with multiple concurrent in-place data post-processing applications (such as product generation and machine-learning inference) and low-latency support for downstream applications (e.g. regional downscaling, hydrological simulations). In-place post-processing is crucial for reducing the pressure on the I/O system and for scaling workflows to very high resolutions. In the current operational system, roughly 70% of the data generated by IFS and stored in the FDB is read back during the time-critical windows for product generation. This causes file system contention, which in turn slows down the numerical model.

We plan to achieve in-place post-processing by adopting the MultIO software stack, developed as part of the MAESTRO project. We will also work on the efficient exploitation of heterogeneous computing resources by experimenting with GPU-accelerated post-processing. Together, these will improve end-to-end runtimes by reducing unnecessary data movement.
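The idea of in-place post-processing can be illustrated with a minimal Python sketch. It is not the MultIO API: the `InPlacePipeline` class, its methods, and the field layout are hypothetical names chosen for illustration only. Each field produced by the model is handed to every registered consumer while it is still in memory and is then written to the archive once, so the consumers never need to read it back from storage.

```python
from typing import Any, Callable, Dict, List

# Hypothetical field layout: a few metadata entries plus a list of values.
Field = Dict[str, Any]


class InPlacePipeline:
    """Toy fan-out of model output to in-memory consumers (illustrative only)."""

    def __init__(self) -> None:
        self.sinks: List[Callable[[Field], None]] = []
        self.archive: List[Field] = []  # stands in for the storage layer

    def register(self, sink: Callable[[Field], None]) -> None:
        self.sinks.append(sink)

    def emit(self, field: Field) -> None:
        # Consumers see the field while it is still in memory...
        for sink in self.sinks:
            sink(field)
        # ...and the archive is written once, never read back by them.
        self.archive.append(field)


maxima: Dict[int, float] = {}
means: Dict[int, float] = {}


def record_max(field: Field) -> None:
    maxima[field["step"]] = max(field["values"])


def record_mean(field: Field) -> None:
    values = field["values"]
    means[field["step"]] = sum(values) / len(values)


pipeline = InPlacePipeline()
pipeline.register(record_max)
pipeline.register(record_mean)

# Stand-in for the model time loop: one small field per step.
for step in range(3):
    pipeline.emit({"param": "2t", "step": step,
                   "values": [280.0 + step, 282.0 + step]})
```

In this sketch both consumers run on every step without any read from the archive, which is the property that relieves pressure on the file system.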

Data generated by global-scale Numerical Weather Prediction (NWP) models will be stored and made available to the downstream applications in the workflow. ECMWF will use the Fields DataBase (FDB) for this purpose. The FDB is a domain-specific object store that efficiently stores and indexes NWP output according to semantic metadata.

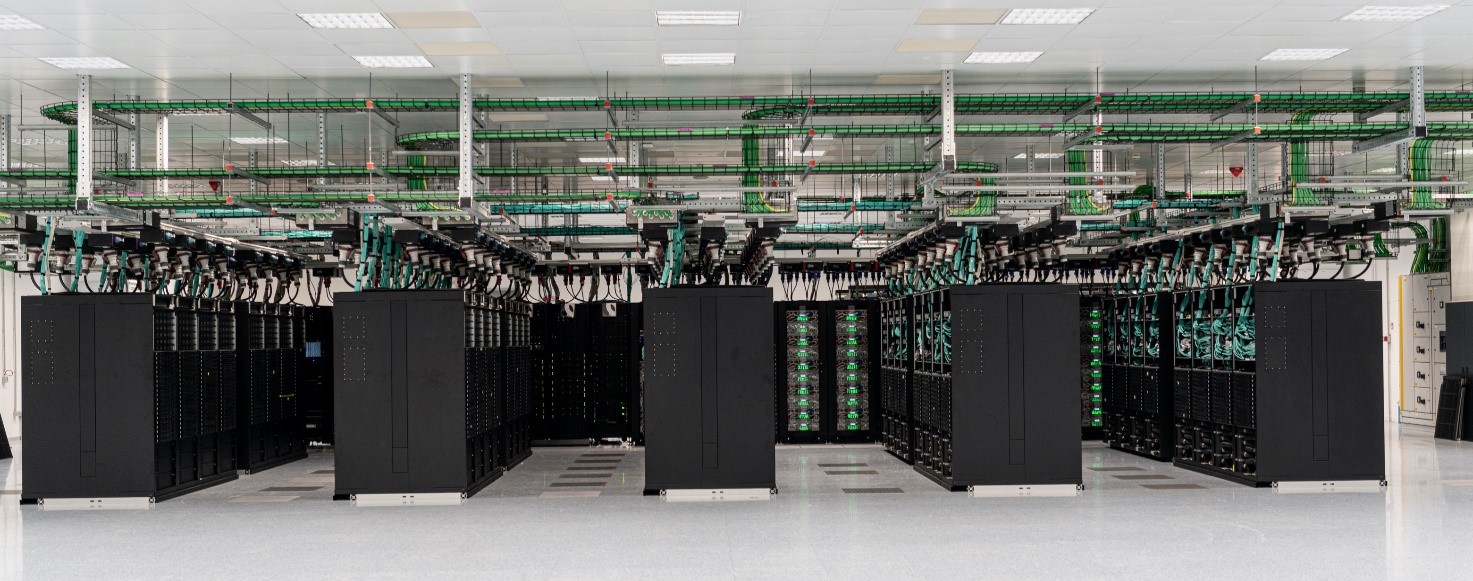
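To make "indexing according to semantic metadata" more concrete, here is a small Python sketch of a store keyed by metadata rather than by file path. It is a toy and does not reproduce the FDB interface or its storage layout: the class `ToyFieldStore`, its method names, and the metadata keys (`param`, `step`, `date`) are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# A key is the sorted tuple of (name, value) metadata pairs, so the same
# metadata always maps to the same object regardless of insertion order.
Key = Tuple[Tuple[str, str], ...]


def make_key(metadata: Dict[str, str]) -> Key:
    return tuple(sorted(metadata.items()))


class ToyFieldStore:
    """In-memory store addressed by semantic metadata (illustrative only)."""

    def __init__(self) -> None:
        self._data: Dict[Key, bytes] = {}

    def archive(self, metadata: Dict[str, str], payload: bytes) -> None:
        self._data[make_key(metadata)] = payload

    def retrieve(self, metadata: Dict[str, str]) -> bytes:
        # Requires the full metadata description of a single field.
        return self._data[make_key(metadata)]

    def select(self, **partial: str) -> List[Dict[str, str]]:
        # Return the metadata of every field matching a partial request.
        matches = []
        for key in self._data:
            metadata = dict(key)
            if all(metadata.get(k) == v for k, v in partial.items()):
                matches.append(metadata)
        return matches


store = ToyFieldStore()
store.archive({"date": "20240101", "param": "2t", "step": "0"}, b"field-a")
store.archive({"date": "20240101", "param": "2t", "step": "6"}, b"field-b")
store.archive({"date": "20240101", "param": "msl", "step": "0"}, b"field-c")

# Key order in the request does not matter.
payload = store.retrieve({"step": "6", "param": "2t", "date": "20240101"})
two_metre_temperature = store.select(param="2t")
```

A downstream application can then ask for data by what it is (parameter, step, date) rather than by where it lives, which is the property the workflow relies on.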

While versions of the FDB have been used in operations for decades, further improvements are required to cope with the increased volume and velocity of data generated by high-resolution forecasts (5 km resolution or better) and with the growing heterogeneity of the hardware landscape. ECMWF is committed to improving the FDB so that it fully exploits the multi-layer data stores available on the EuroHPC computing resources, which may offer a combination of NVMe SSDs and traditional parallel file systems.

The ACROSS infrastructure will provide an invaluable opportunity to test such new technologies at scale and to support the ECMWF strategy. Moreover, ECMWF’s new data centre in Bologna and the CINECA Leonardo pre-exascale supercomputer will sit side by side in the new Bologna Tecnopolo. We are really looking forward to benefiting from the synergies that such proximity will bring to ACROSS and to Destination Earth, which will exploit Leonardo as one of its main computing resources.

Innovation within the ACROSS project is not limited to the meteorological domain: in cooperation with MPI and DKRZ, we are contributing to the use of the FDB in the ICON numerical model, adopted by MPI for climatological simulations. This joint effort will pave the way to closer collaboration between the meteorological and climatological communities and to simpler data interchange among the different models in the complex ACROSS workflows. While we have always had good cooperation with MPI and DKRZ, we expect that the new Bonn site will provide additional opportunities for closer collaboration.