In hydrological modelling, accurate simulations of hydrological processes not only provide reliable discharge simulations for operational water management (e.g. floods and droughts), they also allow for a better understanding of how changes in the future climate affect the hydrological response. With the increasing availability of (global) data at high spatial and temporal resolutions, it is vital that our hydrological models can be run at these high spatial resolutions. However, increasing the spatial resolution of a hydrological model generally increases the computational load of running it. Optimizing hydrological models for speed can therefore improve their usability and accessibility.

Therefore, one of the tasks in ACROSS WP6 is to improve the computational speed of the conceptual distributed hydrological model wflow_sbm (Imhoff et al., 2020; Eilander et al., 2021). Originally, the model code was written in Python, with strong dependencies on the PCRaster library (Karssenberg et al., 2010). In the latest version of the model (v0.5.2, https://github.com/Deltares/Wflow.jl; van Verseveld et al., 2022), we have translated all code to Julia and no longer rely on PCRaster. As a result, we can fully benefit from the high performance of this relatively new programming language. Additionally, the Julia version of the model code supports multithreading, allowing users to employ more of their computer’s resources.

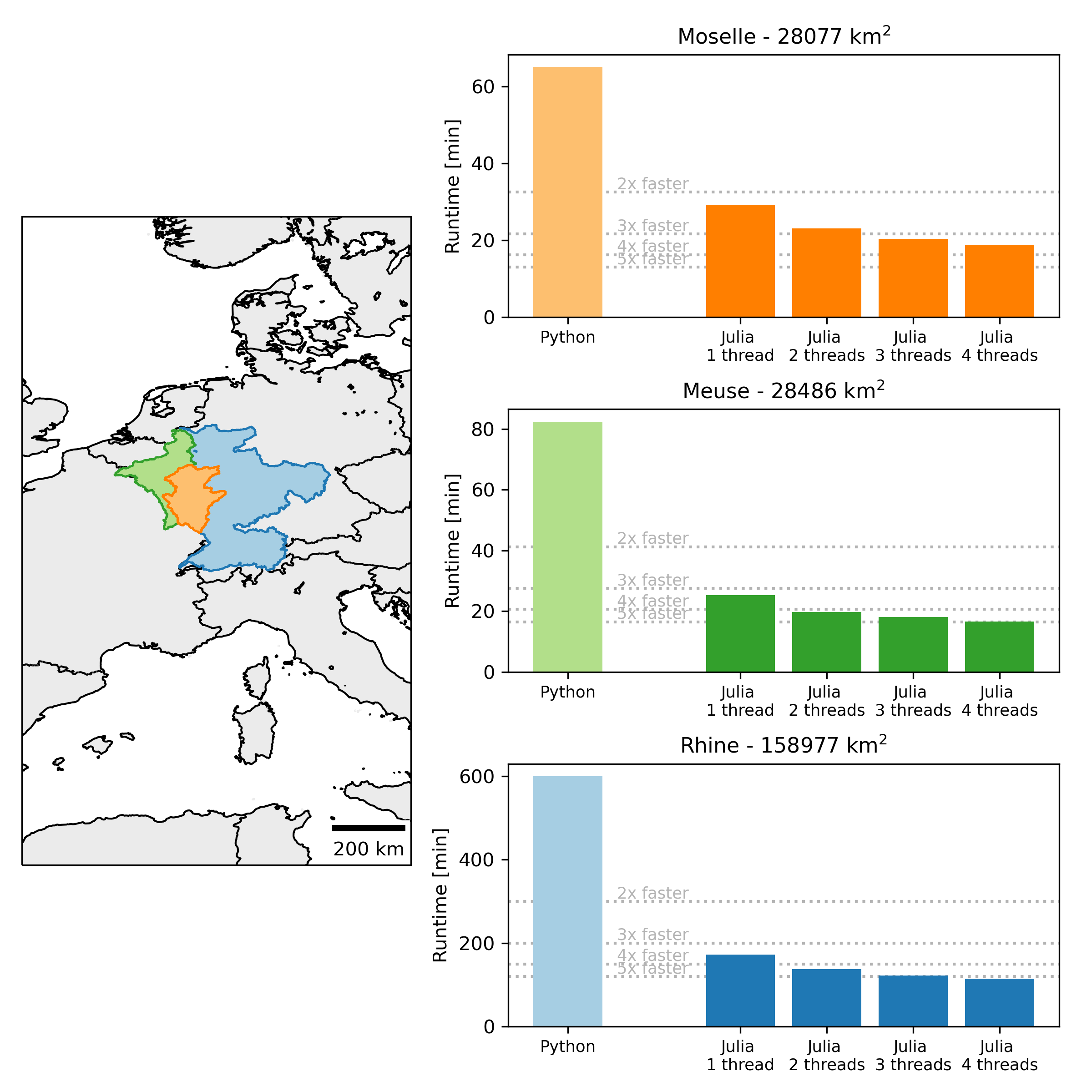

In the figure above, we compare the simulation times of the wflow_sbm model for three large river basins used by the Dutch government (RWS) for operational forecasting, risk assessment and climate impact studies. All I/O operations are excluded from the timing to allow for a clean comparison between the Python and Julia versions of the model. The three models were built using HydroMT-wflow (Eilander et al., 2022) at a resolution of 0.008333 degrees (~1 km) with a daily timestep, and are forced with 5 years of ERA5 data (2000-2005). The simulations were performed on a desktop with an Intel Xeon Gold 6144 CPU (with 4 cores, 4 threads exposed to the user) and 16 GB RAM.
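To make the "~1 km" figure concrete, the short sketch below converts a grid resolution in degrees to an approximate cell size in kilometres. It assumes a spherical Earth with a mean radius of 6371 km, and the latitude of 50 degrees is an illustrative value only, not a property of the three basins. The function name `cell_size_km` is hypothetical and not part of wflow or HydroMT.

```python
import math

# Assumption: spherical Earth with the mean radius below (not from the source text).
EARTH_RADIUS_KM = 6371.0


def cell_size_km(resolution_deg, latitude_deg):
    """Return the approximate (north_south_km, east_west_km) size of one grid cell."""
    # Length of one degree of latitude on a sphere.
    km_per_degree = 2.0 * math.pi * EARTH_RADIUS_KM / 360.0
    north_south = resolution_deg * km_per_degree
    # Meridians converge towards the poles, so the east-west extent shrinks with cos(latitude).
    east_west = north_south * math.cos(math.radians(latitude_deg))
    return north_south, east_west


if __name__ == "__main__":
    # 0.008333 degrees is the model resolution quoted in the text;
    # the latitudes are illustrative assumptions.
    for latitude in (0.0, 50.0):
        ns, ew = cell_size_km(0.008333, latitude)
        print(f"latitude {latitude:4.1f} deg: {ns:.3f} km (N-S) x {ew:.3f} km (E-W)")
```

Under these assumptions, the north-south cell size is a little under 1 km everywhere, while the east-west size becomes noticeably smaller at mid-latitudes, so "~1 km" is best read as a nominal resolution.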

This figure highlights that the switch to Julia has already resulted in substantial reductions in simulation time, independent of the size of the simulated basin. When more threads are enabled, simulation times decrease even further, leading to a model that performs simulations 4-5 times faster than the original Python code. While these are already substantial gains, we aim to further speed up the model code (through enhanced parallelization and the use of MPI) to allow for simulations of even larger regions (e.g. continental Europe) and/or at even higher resolutions.
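As a small worked example of what a speedup factor means for run times, the sketch below converts between measured wall-clock times and a speedup factor. The function names are hypothetical, and the timings of 100 s and 25 s in the usage section are placeholders chosen for illustration, not measurements from the figure.

```python
def speedup(reference_seconds, new_seconds):
    """Speedup factor of a new run relative to a reference run."""
    if reference_seconds <= 0 or new_seconds <= 0:
        raise ValueError("run times must be positive")
    return reference_seconds / new_seconds


def runtime_fraction(speedup_factor):
    """Fraction of the reference run time that remains at a given speedup."""
    if speedup_factor <= 0:
        raise ValueError("speedup factor must be positive")
    return 1.0 / speedup_factor


if __name__ == "__main__":
    # The 4-5x range is taken from the text; it corresponds to 25% and 20%
    # of the original run time, respectively.
    for factor in (4.0, 5.0):
        print(f"{factor:.0f}x faster -> {runtime_fraction(factor):.0%} of the original run time")

    # Placeholder timings (assumption, not measured values).
    print(f"speedup: {speedup(100.0, 25.0):.1f}x")
```

In other words, a model that is 4-5 times faster completes the same simulation in roughly a fifth to a quarter of the original time.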

References

Eilander, D., Van Verseveld, W. J., Yamazaki, D., Weerts, A. H., Winsemius, H. C., and Ward, P. J. (2021). A hydrography upscaling method for scale-invariant parametrization of distributed hydrological models. Hydrology and Earth System Sciences, 25(9), 5287-5313. https://doi.org/10.5194/hess-25-5287-2021

Eilander, D., Boisgontier, H., van Verseveld, W. J., Bouaziz, L., and Hegnauer, M. (2022). hydroMT-wflow (v0.1.4). Zenodo. https://doi.org/10.5281/zenodo.6221375

Imhoff, R. O., van Verseveld, W. J., van Osnabrugge, B., and Weerts, A. H. (2020). Scaling Point-Scale (Pedo)transfer Functions to Seamless Large-Domain Parameter Estimates for High-Resolution Distributed Hydrologic Modeling: An Example for the Rhine River. Water Resources Research, 56, e2019WR026807. https://doi.org/10.1029/2019WR026807

Karssenberg, D., Schmitz, O., Salamon, P., de Jong, K., and Bierkens, M. F. P. (2010). A software framework for construction of process-based stochastic spatio-temporal models and data assimilation. Environmental Modelling & Software, 25(4), 489-502. https://doi.org/10.1016/j.envsoft.2009.10.004

van Verseveld, W. J., Visser, M., Bootsma, H., Boisgontier, H., and Bouaziz, L. (2022). Wflow.jl (v0.5.2). Zenodo. https://doi.org/10.5281/zenodo.5958258