Aiming for improved projections and a better understanding of important climate processes, climate modelling strives for ever higher spatial and temporal resolution in its simulations. Accordingly, the amount of data handled and produced by competitive Earth system models is growing rapidly, posing a complex challenge not only to the hardware but also to the software used in this context. Whereas computing power has become cheaper and more readily available in recent decades, input-output infrastructures still have to keep up with the data generated. Weather and climate forecasting is one of the three pilot projects in the ACROSS project. In this pilot, the Max Planck Institute for Meteorology (MPI-M), which operates a modern, competitive Earth system model, is working on improving its data management workflow, especially for large ensembles of experiments and for storm-resolving model runs.

The ICON model

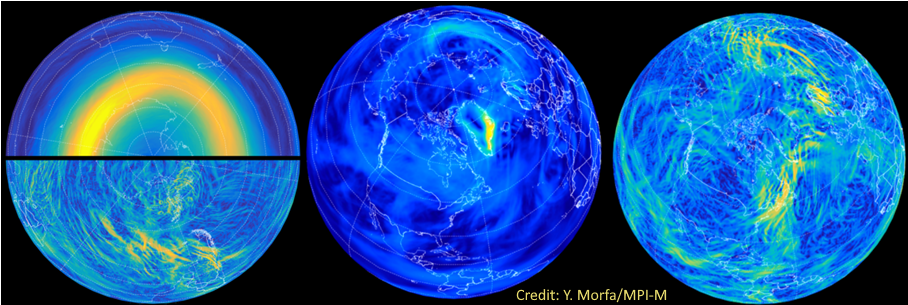

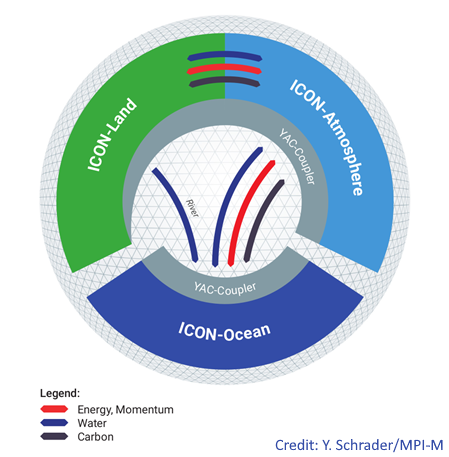

The ICON (ICOsahedral Nonhydrostatic) model is developed in a continuous collaboration among MPI-M, the German Weather Service (DWD), the German Climate Computing Center (DKRZ), and the Karlsruhe Institute of Technology (KIT). Its weather forecasts are used by more than 30 national weather services as lateral boundary conditions for limited-area forecasts.

Although ICON was originally developed mainly for numerical weather prediction, it is a collection of sub-models for the atmosphere, land surface and ocean circulation that can be used separately or in different combinations as a coupled model. As it integrates a wide variety of aspects of the global Earth system (e.g. physics, chemistry, biogeochemistry), it is a central tool for climate simulations and for assessing different scenarios. Currently, many researchers at MPI-M and other research institutions employ ICON to address a variety of research questions, from slow, low-resolution, long-timescale processes to fast, high-resolution, short-timescale ones. It has been a central element of various high-profile scientific publications.

The BORGES data system

To handle and organize the vast amount of data produced by the ICON model efficiently, MPI-M will develop and evaluate a large-scale data system, Better ORGanized Earth System model data (BORGES), in the course of the ACROSS project. The ICON model is the main data source in BORGES, and its output is stored in the Field DataBase (FDB), the major sink for stream data, which offers several back-ends for the actual storage. FDB has been developed at ECMWF as a domain-specific object store designed for fast archival and retrieval of meteorological and climatological data.

As its main task, the BORGES data system ensures the consistency of all data stored in it, in particular between the metadata, such as the model parameters needed for easy reproducibility, and the large data streams of model output. For High-Performance Data Analytics, these streams can additionally be distributed to further post-processing tasks. For data retrieval, BORGES will provide easy, semantic and efficient access to the stored data to help climate researchers in their everyday work. Because it is part of their research workflow, it has to account for the varied needs of numerous users.
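The idea of keeping metadata and output streams consistent, and of retrieving data by meaning rather than by file path, can be illustrated with a small toy model. This is only a sketch under stated assumptions: the names `FieldKey`, `ToyStore`, `register_experiment`, `archive` and `retrieve` are hypothetical and do not correspond to the BORGES or FDB interfaces, which are not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldKey:
    """Hypothetical semantic key identifying one output field."""
    experiment: str
    variable: str
    step: int


class ToyStore:
    """In-memory stand-in for a keyed store (not the real BORGES or FDB API)."""

    def __init__(self):
        self._experiments = {}  # experiment name -> model parameters
        self._fields = {}       # FieldKey -> raw payload

    def register_experiment(self, name, parameters):
        # Metadata is recorded first, so every field can be traced back to it.
        self._experiments[name] = dict(parameters)

    def archive(self, key, payload):
        # Consistency rule of this sketch: no output without known metadata.
        if key.experiment not in self._experiments:
            raise KeyError(f"unknown experiment: {key.experiment}")
        self._fields[key] = payload

    def retrieve(self, **request):
        # Semantic retrieval: match on any subset of key attributes.
        return {
            key: payload
            for key, payload in self._fields.items()
            if all(getattr(key, name) == value for name, value in request.items())
        }

    def parameters(self, experiment):
        return dict(self._experiments[experiment])


store = ToyStore()
store.register_experiment("exp001", {"resolution_km": 5})
store.archive(FieldKey("exp001", "tas", 0), b"\x00\x01")
store.archive(FieldKey("exp001", "tas", 1), b"\x02\x03")
store.archive(FieldKey("exp001", "pr", 0), b"\x04\x05")

# All time steps of one variable, without knowing any file layout.
tas_fields = store.retrieve(experiment="exp001", variable="tas")
```

In this toy model, an attempt to archive a field for an unregistered experiment is rejected, so the model parameters needed to reproduce a run are always available alongside its output.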

In its data flow, BORGES seeks an efficient compromise between a centralized database architecture and computing jobs distributed across the High-Performance Computing (HPC) cluster. It is centralized in the sense that access to the system is only possible via the defined interfaces (e.g., a shared library would not fulfil this requirement). However, the processes that implement these interfaces are distributed among user HPC jobs as well as classical services running on virtual or dedicated hardware servers.

Over the next two years, as part of the ACROSS project, MPI-M will build and evaluate this system in several HPC environments, partly on specialized storage hardware. We are confident that BORGES will prove to be highly useful for climate researchers, not only at MPI-M but also across the weather and climate community.